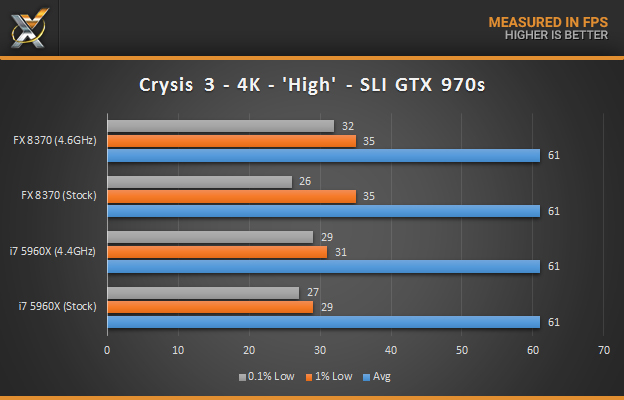

ULTRA HIGH-END: SLI ASUS STRIX GTX 970 – 4K

Crysis 3

Crytek’s final installment in the Crysis trilogy is easily their most graphically ambitious release, and even though it is now a few years old, it still represents the bleeding edge in graphical fidelity. We test on “High” with FXAA.

Starting off our 4K benchmarks, we can see that Crysis 3 plays nearly the same on all configurations, with a slightly worse frame time on our 5960X. However, once overclocked, our FX-8370 delivers the best experience, never dropping below 30 FPS in our frame time measurements.
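The Crysis 3 result above is stated in terms of frame times rather than a single FPS number. The Python sketch below shows one rough way to summarise such a log. It computes three things:

- the average FPS,
- the FPS of the slowest single frame,
- how many frames miss a 30 FPS floor (any frame longer than about 33.3 ms).

The frame times are made up. The review does not say which capture tool or exact formula it used, so treat this as an assumption-based sketch, not the review's method.

```python
# Sketch: summarising a hypothetical frame-time log (milliseconds per frame).
# Assumption: the log is already a list of floats; parsing a real capture
# file is not shown.

def average_fps(frame_times_ms):
    """Frames rendered divided by total elapsed seconds."""
    total_seconds = sum(frame_times_ms) / 1000.0
    return len(frame_times_ms) / total_seconds


def worst_frame_fps(frame_times_ms):
    """Instantaneous frame rate of the single slowest frame."""
    return 1000.0 / max(frame_times_ms)


def frames_below(frame_times_ms, fps_floor=30.0):
    """Count frames slower than the floor (30 FPS means longer than ~33.3 ms)."""
    budget_ms = 1000.0 / fps_floor
    return sum(1 for t in frame_times_ms if t > budget_ms)


# Made-up numbers for illustration, not measurements from this review.
log = [25.0, 26.0, 24.0, 30.0, 40.0, 25.0]
print(round(average_fps(log), 1))      # 35.3
print(round(worst_frame_fps(log), 1))  # 25.0
print(frames_below(log))               # 1
```

Average FPS here is total frames divided by total time. Averaging each frame's instantaneous FPS instead would overstate the result whenever frame times are uneven.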

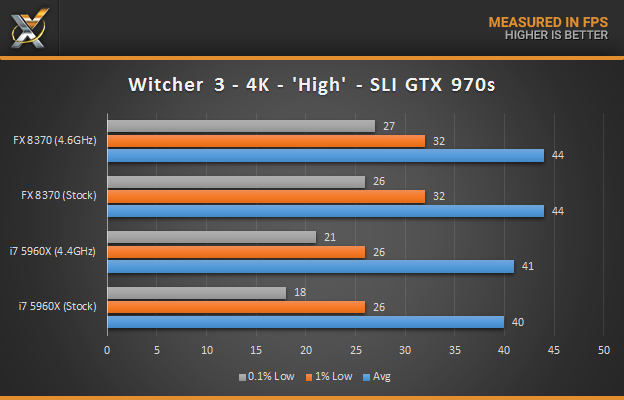

Witcher 3

The Witcher 3 is definitely one of the most highly anticipated game releases this year. As the follow-up to developer CD Projekt RED’s The Witcher 2, which was one of the most graphically intense PC games when it was released, it has some big shoes to fill. We test on “High” with NVIDIA HairWorks off and post-processing set to “High” with FXAA enabled.

Above we can see that the FX-8370 is about 10% faster in terms of average FPS, both at stock and overclocked. Frame time variance is also much better, although both CPUs do drop below 30 FPS, which can lead to some noticeable stutter. Still, the FX-8370 does deliver a smoother experience overall.
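Percentage gaps such as “about 10% faster” depend on which result is used as the baseline. Here is a minimal sketch with hypothetical FPS values (not read from the charts):

```python
def percent_faster(fps_a, fps_b):
    """How much faster A is than B, expressed as a percentage of B."""
    return (fps_a - fps_b) / fps_b * 100.0


# Hypothetical averages, not taken from the review's charts.
print(round(percent_faster(44.0, 40.0), 1))  # 10.0
print(round(percent_faster(40.0, 44.0), 1))  # -9.1
```

Going from 40 to 44 FPS is a 10% gain, but going back from 44 to 40 FPS is only about a 9.1% loss. So “A is 10% faster than B” is not the same statement as “B is 10% slower than A”. The review does not state which baseline it uses.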

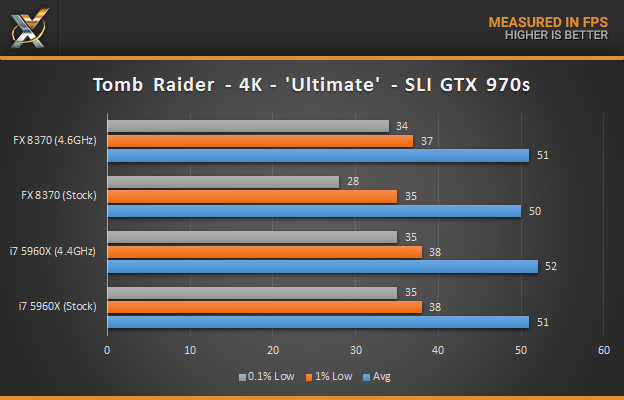

Tomb Raider (2013)

The most recent iteration of Crystal Dynamics’ long-running series, Tomb Raider is one of the best-looking titles on PC to date. Its varied landscapes and beautiful organic environments make it quite graphically demanding. We test this game on “Ultimate” with TressFX and FXAA.

Tomb Raider is definitely not a very CPU-bound title, and that shows once again here. Performance differences are less than 1% in all instances, except for our stock AMD configuration, which dips slightly lower in our frame time results. Still, all configurations played the game fairly smoothly.

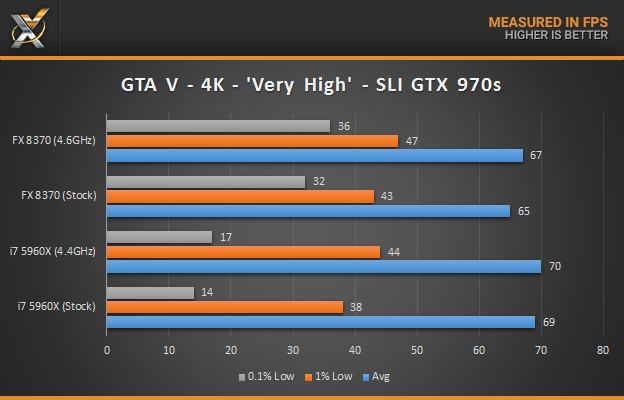

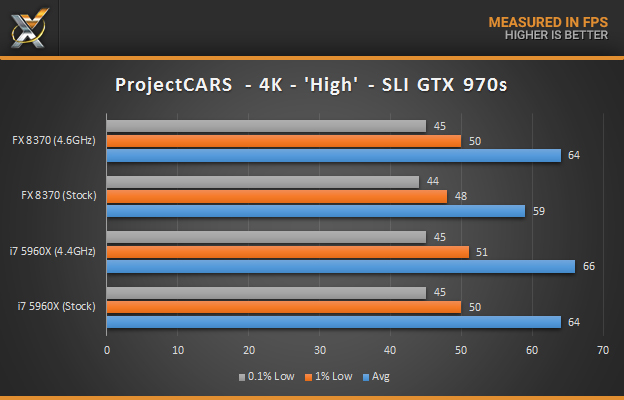

Grand Theft Auto V

One of the most highly anticipated releases for PC, GTA V is the latest installment of Rockstar’s long-running, genre-defining open-world shooter series. It is also their most graphically demanding title yet, with a massive city brought to life in immense detail. We test on “Very High” with all “Advanced Graphics” and MSAA options turned off and FXAA enabled.

Very interesting results. In terms of average FPS, the 5960X outpaces the AMD chip by at least 5% (about 3-4 FPS). However, its frame time variance is much worse, which would make for a fairly stuttery experience. This is exactly why these metrics are so important: they can reveal hidden performance issues.
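The GTA V result shows how an average can hide stutter. The review does not spell out how it computes frame time variance. The sketch below assumes the population standard deviation of frame times as one common measure, and applies it to two invented traces with the same average.

```python
import statistics

# Two made-up frame-time traces in milliseconds. Both average 20 ms (50 FPS),
# but the second alternates fast and slow frames, which is felt as stutter.
steady = [20.0] * 8
uneven = [10.0, 30.0] * 4

for name, trace in (("steady", steady), ("uneven", uneven)):
    mean_ms = statistics.mean(trace)
    spread_ms = statistics.pstdev(trace)  # population standard deviation
    worst_fps = 1000.0 / max(trace)       # slowest frame as an FPS figure
    print(f"{name}: mean {mean_ms:.1f} ms, stdev {spread_ms:.1f} ms, "
          f"worst frame {worst_fps:.1f} FPS")
```

Both traces report 50 FPS on average, yet half the frames in the second trace run at roughly a 33 FPS pace. A frame time chart exposes that kind of unevenness, and an average hides it.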

Project CARS

Project CARS is easily one of the biggest racing sims out there, with realistic weather conditions, advanced lighting effects, and physics. Slightly Mad Studios has a pedigree in making racing games, and this one is no slouch. We test on the Nürburgring in “Stormy” weather conditions at “High” settings with all forms of anti-aliasing turned off.

As we can see above, performance is very similar. At stock there is a difference of just above 8%, with the 5960X winning out. Once overclocked, the gap shrinks to about 3% in terms of average FPS, and frame time variance is nearly identical.

Technology X Tomorrow's Technology Today!

As a fellow reviewer, I appreciate the many hours of work that went into this. Thanks for opening people’s eyes.

Thanks a lot Jason. I’m a huge fan of your work so that means a lot coming from you.

Your review is misleading and ill-informed, for the reasons I have listed in the chat room below.

On the other hand, I, as a former fellow reviewer, call this benchmark unprofessional and misleading. First of all, you are comparing a $1k CPU with a $190 one. Why not compare it with an i5 and i7? Secondly, if you had done any benchmarking (proper benchmarking), you would have noticed that most games that support multi-core CPUs only use quad-core CPUs efficiently in Intel’s case. In AMD’s case, it’s the FX-6300. Shame on you, sir (Donny Stanley), for being lazy and for creating such low-quality clickbait.

Well, sorry to disappoint you then.

Former fellow reviewer? For what site? Some random no-name forum?

The title of this article is “AMD vs Intel – Our 8-Core CPU Gaming Performance Showdown!”, so what place does an i5 or quad-core i7 have here? It’s not rocket science. There are tons of other reviews that talk about those cases, but this one is purely about octa-core CPUs, so the differing price ranges of the chips are totally irrelevant.

AMD vs Intel? Shame about the title!

And yes, it is unprofessional.

Except the AMD CPU is not really a true octa-core CPU, since each core shares a fetcher and decoder, FPU, and its cache. This is an 8-thread “4-core” CPU vs a true 8-core, 16-thread CPU. Unless you have more than 3 top-of-the-line GPUs in your system, the 5960X is a waste. In gaming it will be inferior to the 4-core Intel CPUs with 8 threads, due to lower single-core performance and clock speeds, so yes, this review is very misleading.

And the review article shows that a $190 CPU can keep up with a $1000 CPU in games. How is it unprofessional to point that out?

Yeah, people have $1000 CPUs connected to a GTX 960 running at 1080p, c’mon. Most of these benchmarks are GPU-limited. If you’re going to do that, then run an Intel CPU at about the same price.

Bang that’s it

Here is why:

https://www.youtube.com/watch?v=PgejkSWzvNs&list=PLyReHG5dDxXWxtuArwVgQkVAv6dppbbEp

And here is a better comparison, since the AMD CPU only has 8 threads, not 16, and it also has a higher clock speed because of this. All of the CPUs in this review are designed for gaming, unlike the one they are comparing in this terrible review, and they all have stock clock speeds of 4.0 GHz and 8 threads.

https://www.youtube.com/watch?v=BDVcpAhegWs

There are always naysayers.

You must be an Intel fanboy “reviewer” who cannot accept the fact that a cheap AMD 8-core CPU on a 3-year-old architecture can match an expensive and newer Intel 8-core CPU.

THAT’S TRUE! They are sons of evil Intel Godzilla.

When you make everything GPU-bound, you could have put in an i3 and seen the $120 i3 match your $200 AMD chip…

Yeah, try running Netflix or having your internet browser up as well as your streaming programs and media player; an i3’s not gonna do that.

My i5 4690K does all that and more. Hyper-Threading is a joke.

Hyper-Threading is good for games that are CPU-bound, like GTA 5, Crysis 3, Cities: Skylines, etc… It’s also great for multitasking, plus you get a higher out-of-the-box clock speed with an i7 4790K than an i5 4690K, for example.

If you buy an 8-core Intel (5960X) for gaming you are dumb as fuck; it’s not meant to be used for gaming.

Dumb people.

Exactly. The only use for it in gaming is if you are running 3 or more top-of-the-line graphics cards, and I mean top of the line: we’re talking Fury X, GTX 980 Ti, or Titan X. Otherwise you will get shit performance in games with this in comparison to an i7 4790K or i7 6700K.

Majorpayne19, I purchased the flagship AMD Phenom II 965 with a Sapphire Radeon 5870 in 2010. This combination heats my house during the winter. Too hot. AMD was good for me back then. I am going to get a Skylake i5 6600 or a Haswell i7 4790. AMD needs to do more work to keep hardware cool and quiet.

You could afford a Phenom 965 and a 5870 in 2010, but you couldn’t add $25 more for an aftermarket cooler?

What I find funny is that he used SLI 970s. Come on, indulge us, for such an expensive CPU, you should be at least using 3x 980 TI or Titan Xes, maybe dual R9 295X2s.

OMG!!! How stupid are you!!!!!!!!!!!!!!!!!!!! :))) 3X 980Ti or 2X R9 295X2??? First, a SINGLE 295X2 crushes at least 980Ti in SLI! 2X 295X2 (=4 GPUs) eat 4X 980Ti!!!! And second, any card can go on a CPU, it doesn’t matter if it’s weak, but a strong card on a weak CPU… this is the problem, or a bottleneck if you know what that is 🙂 And yeah, the 9xx series is a “great” card 😀 You can’t play the new Batman on a 980!!! On LOW settings! And AMD’s cards run Batman without problems… The 9xx series is SHIT! The 7xx series is 3 times better… how many noobs are here!!! Fucking fanboys! Here are the facts and you still don’t believe them because you are a blind fucking fanboy!

That’s the point. We’re meant to see the CPU bottleneck. Or are you saying we should just look and see “Yep, 30 FPS with every game, on every CPU ever made with 8 cores”?

Exactly, they made things GPU-bound and did not stress the CPUs much at all, thus the results seem the same…
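The “GPU-bound” argument in this thread can be made concrete with a deliberately simplified model. If the CPU can prepare frames at one rate and the GPUs can render them at another, the slower of the two roughly sets the frame rate. The numbers below are hypothetical, and real games shift between the two limits from scene to scene.

```python
def delivered_fps(cpu_limited_fps, gpu_limited_fps):
    """Simplified model: whichever stage is slower caps the frame rate."""
    return min(cpu_limited_fps, gpu_limited_fps)


# Hypothetical per-stage limits, not measurements from the review.
cpus = {"fast CPU": 120.0, "slower CPU": 80.0}
scenarios = (("GPU-bound (high res)", 45.0), ("CPU-bound (low res)", 150.0))

for scenario, gpu_cap in scenarios:
    results = {name: delivered_fps(cap, gpu_cap) for name, cap in cpus.items()}
    print(scenario, results)
```

In the GPU-bound case both CPUs land on the same 45 FPS. The gap between them only appears once the GPU limit rises above both CPU limits.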

Let’s start listing how many benchmarks show the i3/i5 and i7 beating AMD in almost every other game around…

https://www.anandtech.com/show/8316/amds-5-ghz-turbo-cpu-in-retail-the-fx9590-and-asrock-990fx-extreme9-review/8

https://www.xbitlabs.com/articles/cpu/display/amd-fx-9590-9370_5.html#sect0

https://techreport.com/review/26977/intel-core-i7-5960x-processor-reviewed/5

https://www.techspot.com/review/875-intel-core-i7-5960x-haswell-e/page9.html

https://www.bit-tech.net/hardware/2014/08/29/intel-core-i7-5960x-review/10

In all benchmarks my 8370E beats the i5, except for single-core. My chip cost 160 euros, and the i5 4690K cost 240 euros. In all benchmarks, when overclocked, my chip comes in right between the i7 4770K/4790K and the i5 4690K (at the same clock speeds). Attachments were added to a comment reply to this buddy douche… You do realize that AMD has 95 W TDP chips, right? You like to show the 125 W variants.

Don’t get me wrong, the Intel chip wins in single-core, based on IPC (I also own a couple of Intel-based systems), but the AMD-based systems I own are far from horrid. We’re talking about performance that comes in right between the i5 and i7 chips, for the price of an i3 (yes, I own an i3, which needs to be upgraded to a Xeon in my server)!

Your synthetic benches are not really showing how Intel wins.

As far as DX12 goes, Radeon GPUs gained up to 80%, while the GTX lineup seems to have taken a hit in performance in most tests.

Add two 980 Tis to your 8370E and see how it will work, dad.

You buying? I’m going more for the R9 390X2; it’s a lot cheaper, with similar effects.

https://youtu.be/H1pURs5ZJvw

That’s not SLI, but every Intel fanboi will always state that AMD bottlenecks high-end cards. That is 4K on the 980 Ti, with no bottleneck.

My guess is that you’ve never tried it. I must say 4K on PCIe 2.0 is pretty impressive as it is.

It’s all about preference. If you prefer bang for your buck, go AMD… If you prefer to spend double for a 5% increase in FPS, then go Intel.
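The “spend double for a 5% increase” trade-off reduces to a ratio of ratios. In the small sketch below, the $200 / 60 FPS starting point is an invented placeholder. Only the price ratio (2x) and the performance ratio (1.05x) affect the result.

```python
def fps_per_dollar_ratio(price_a, fps_a, price_b, fps_b):
    """FPS-per-dollar of option A divided by that of option B."""
    return (fps_a / price_a) / (fps_b / price_b)


# Scenario from the comment: B costs twice as much and is 5% faster.
# The $200 / 60 FPS baseline is a made-up placeholder; only the ratios matter.
ratio = fps_per_dollar_ratio(200.0, 60.0, 400.0, 63.0)
print(round(ratio, 2))  # 1.9
```

A ratio of about 1.9 means the cheaper option delivers roughly 1.9 times the FPS per dollar in that scenario. The calculation ignores motherboard and cooling costs, and in a GPU-bound game the FPS difference may be close to zero anyway.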

Well, I have two Furys (around 7% slower than a 980 Ti on the latest drivers) on my 8370 (@ 4.95 GHz, mind you). I pull 178 FPS in Tomb Raider 2013 (Ultimate settings), 125 FPS at 1440p on Ultimate settings, and 66 FPS at 4K, once again on Ultimate settings.

Soo true.

And ewww, I do not want to fuck you, you sick loser.

How can a single 295X2 crush 980 Ti in SLI? -_-. Sorry for replying to a very old comment, but it’s pretty clear that you don’t follow any game review websites or even YouTube videos where people compare these GPUs (like 290/290X vs 980/980 Ti, etc.). The 295X2 is nothing but a CrossFire pair of 290s, and it may give higher FPS than a 980 Ti, but gaming performance will be better on the Ti or the R9 Fury X. So yes, 2x 295X2 has no chance of beating a 980 Ti SLI or R9 Fury X CrossFire setup, because 3- or 4-GPU scaling is pretty bad most of the time.

295X2 vs 980Ti in SLI??? whaaat???? Please, read something about the 295X2!!! OMFG!!!!! 290 in CrossFire is the same as the 295X2????? Boy, you are soooooo stupid!!! RETARD!!!! 290???? Why not 2x 290X in CrossFire??? Even 2x 290X in CrossFire is not as strong as the fucking BEAST, DOMINATOR OF GPUs, the R9 295X2! RETARD!!!!! And now comes a new KILLER card, the Fury X X2…. Don’t look at benchmarks now; the card is not released, and benchmarks that show the performance of this BEAST card are not true… Check benchmarks when the card comes out… AMD is king of GPUs, no doubt… Nvidia will never be ahead…

Easy there, son. It’s not the end of the world.

son? 🙂 omg…

“computers… it’s my job!”

followed by a stream of incoherent and insane rambling and ranting. the sad thing is people like this *really do* imagine themselves to be experts when in reality they truly appear to be in need of professional help and remedial education.

Truth

yeah, it’s my job… that’s what you LAYMEN and fanboys are saying… have you any proof??? I have a lot…

You clearly have no idea about what you are talking about and it is funny that people trust you with their computers.

yeah… I see what you GAYS know about this 🙂

Two GTX 780 Tis in SLI can beat the R9 295X2, and GTX 980s in SLI will completely demolish it.

https://www.youtube.com/watch?v=CZhC66yjROs

because after 295 it says X2!!!! And two cards in SLI/CrossFire does not mean literally twice as strong! What is wrong with you?? E.g. if a card has 4GB and you put two cards in SLI, you will not get 8GB!!! It stays at 4GB… you know that? No… so if there are two cards in SLI, it is not twice as strong.. it is stronger, but not even close to double… OMFG!!!

This is not twice as strong either, because it is basically the same as CrossFire. Also, you are not really getting 8GB of VRAM with this unless the devs designed the game for VRAM stacking and the API is DX12, Mantle, or Vulkan. Even if your rig incorrectly shows 8GB, you’re only really getting 4 in DX11.
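The VRAM point can be sketched as follows. In the usual DX11 SLI/CrossFire setup, each GPU holds its own copy of the textures and buffers, so usable memory is that of a single GPU. Only an engine that deliberately splits resources across GPUs (possible in principle with explicit multi-GPU APIs) could use more. The function and its `explicit_split` flag are hypothetical illustrations, not any driver's or API's interface.

```python
def usable_vram_gb(per_card_gb, explicit_split=False):
    """Rough usable VRAM for a multi-GPU setup.

    Mirrored (typical DX11 SLI/CrossFire): each GPU keeps its own copy of the
    assets, so capacity is limited by the smallest card.
    explicit_split=True is a hypothetical flag for an engine that divides
    resources across GPUs itself; the sum is an upper bound, not a guarantee.
    """
    if explicit_split:
        return sum(per_card_gb)
    return min(per_card_gb)


# A dual-GPU card such as the R9 295X2 carries 4 GB per GPU.
print(usable_vram_gb([4, 4]))                       # 4
print(usable_vram_gb([4, 4], explicit_split=True))  # 8
```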

what?????????????? :))))))) R9 295X2 is like two 290s in CrossFire??? Then what about the 290X??? Dude, computers are my job 😉

Then you are terrible at your job, because the 295X2 is exactly the same as two 290Xs in CrossFire.

https://www.youtube.com/watch?v=JEgsG_wl0kc

Very True

I am going to replace my ATI Sapphire Radeon 5870 with a GeForce GTX 960. How is the Radeon R9 290/390?

My Gigabyte Windforce R9 280X OC ver.2 is still faster and better than the new 380X… I love AMD, but the 3xx series is the same as the 2xx; it only has a new chip but is not better… Only the 390X is good, and the Fury X is amazing. The same thing is true with Nvidia: the 9xx series is not better than the 7xx series, or even some cards of the 6xx. But like AMD, Nvidia has one great card, the 980 Ti… The GTX 980 Ti and Fury X are new cards; all the other cards are the same as, or even slower than, the old series (R9 2xx/GTX 7xx). And DX12… now all cards with GCN architecture support DX12! HD 7xxx, R7 2xx, and R9 2xx… I love AMD, but the 3xx series (not the 390X and Fury X) is very bad… :/ But AMD always has AMAZING drivers, not like Nvidia for the 9xx series! I don’t hate Nvidia, but it is a fact that Nvidia has terrible drivers… And finally, AMD is still on top, king of GPUs (like Intel is king of CPUs, for now; when “ZEN” comes out 😀 bye, bye throne 😀 ). AMD always has a flagship card! Now comes one more flagship card, the 2X Fury X 😀 For now, the R9 295X2 is the killer of all GPUs…

better take 290.. 390 is very weak… 860.. no comment

shut up bitch

Exactly

I think the article was rather informative. From this and other research I’ve done, I’ve found that while Intel does provide the more powerful processors, at a given price point you’ll get about the same amount of capacity for your buck. The AMD 8-core may only perform as well as the Intel 6-core, but they’ll generally have about the same cost. You could take the power route and go with the Intel 8-core for much more money. But since the software being released generally doesn’t come close to using its capabilities, you’re basically buying a Ferrari to drive to the convenience store: you’ll never get to use its full capabilities.

because it is 8 core vs 8 core, you retard. people who compare an 8 core vs a quad core are just stupid.

The AMD FX-8350 is not a true 8-core CPU. It is more similar to a quad core with Hyper-Threading than anything, although it is actually still worse, because every two of the so-called 8 cores share a fetcher, decoder, FPU, and cache. With Intel, by contrast, each core is a full-fledged core and has Hyper-Threading. Since each core does not have to share its resources with another, you get higher single-core performance, and both have only 8 threads. Compare it with an i7 4790K, for example, which also has the same clock speed and 8 threads, and you will see the AMD FX-8350 get its ass completely handed to it. I know this for a fact because I have owned and tested both.

https://www.youtube.com/watch?v=PgejkSWzvNs&list=PLyReHG5dDxXWxtuArwVgQkVAv6dppbbEp

Sounds about right. I’ve always favored AMD for its inexpensive but good CPUs. I was AMD/Nvidia (CPU/GPU) for a while (and Intel/Nvidia before that) and am AMD/AMD lately. I switched to an ATi 3870 on a lark, just to see if it felt any different than running a GeForce (it didn’t), and then I stuck with ATi when it got bought out by AMD.

To be honest, I feel that AMD’s always been competitive with Intel in CPUs, at least in terms of price/performance, and the same goes for Nvidia. Personally, I just liked supporting AMD because I do not want Intel and Nvidia to become monopolies. Don’t get me wrong, I like Intel and especially Nvidia products, but I don’t trust any company when it’s a monopoly.

It really isn’t, though. When you get down to performance, Intel is always ahead; the main issue is that people think more cores = better, which is not true. Very, very few games can efficiently use more than 2-4 cores.

Intel is at a point where even i3s are outperforming 8-core AMD chips in gaming and day-to-day use.

Unless you have a specific application that can fully utilize 8 cores, Intel will almost always win out, even with fewer physical cores.

it really is. if you get down to performance, democrats are always better.

Intel and AMD are like Republicans and Democrats.

I would like to see tests using also AMD GPUS.

So much this. Their GPUs are well known to be less CPU-efficient in DX11 mode.

This is especially apparent when you couple low-end CPUs (like a Pentium or i3) with their midrange offerings (like a 270).

There are a bunch of tests proving that.

That would take way too much time. As it is now, I bet this took weeks.

Hope you follow up with a piece on AMD GPUs, as they don’t have PhysX support, and that sometimes falls back to the CPU to handle some of that load.

False…you can disable PhysX.

I normally don’t say this, but please state your justification for claiming that I am false in stating that AMD GPUs do not have native PhysX support.

You can disable PhysX enhanced features in games and in the Nvidia Control Panel… but you cannot eliminate the *physics* that are processed in gaming environments. The question is specific to physics processing in a GPU-neutral environment – no TressFX, no PhysX. It’s been long perceived that Nvidia has a natural advantage here because of the PhysX acquisition, I’d like to see that revisited is all.

Wow really? I didn’t say AMD’s have PhysX support. I said you can disable it. You are saying without a Nvidia card you are taxing the CPU with PhysX which is bullshit. Disable it.

PS: Gameworks PhysX =/= physics

Of course you can’t disable physics that are a part of the game code. Nvidia GameWorks PhysX, however, is something you can disable.

Try it. Or better yet, read about it from someone other than me. https://www.reddit.com/r/pcgaming/comments/36o9rl/physx_to_cpu_getting_15_less_gpu_usage_on_same/

Have a good day sir.

No idea what you’re linking but I don’t give a crap.

^ Classic troll response when unwilling to accept or even entertain a logical argument that could easily prove him wrong.

Classic troll… Um, please go look up the meaning of the term.

I stated a fact. I don’t need to scroll through your stupid Reddit thread.

Yeah forreal, this guy is everywhere trying to hate on AMD. Wouldn’t mind at all if the site banned him from posting because he’s a grade A dick.

hahahahaha Speaking of trolls.

Nobody’s trolling. You’re just a grade A asshole who nobody would miss if you ended up banned from this site.

Hey there’s one of your anecdotal opinions!

You were the one arguing, I respond in kind and I’m a “troll”. Grow up 🙂 oh, and go do some research.

oh man this has been entertaining

but really, like I suggested above, do get a counselor. You’re clearly dealing with a lot of emotions/baggage and have no way to take it out other than the comments section of a tech website 🙁

I’m busted man. You are so clever.

My brother got an AMD card and can play with HairWorks and PhysX enabled. He actually got better FPS than me, because he can lower the tessellation to x32 or x16. I have an Nvidia card and can’t change the default tessellation of x64. There is no difference between x64 and x32, and little with x16.
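This sketch gives a rough sense of why lowering the tessellation factor from x64 to x32 or x16 can raise FPS. It uses a simple uniform-subdivision model, which is an assumption; the actual D3D11 tessellator pattern differs in detail. Under that model, a factor of n splits each triangle patch into about n² triangles, so halving the factor cuts the generated geometry to roughly a quarter. Whether that is visible on screen, as the comment discusses, is a separate question.

```python
def approx_triangles(tess_factor, patches=1):
    """Rough triangle count if each triangle patch is split uniformly.

    Assumption: a simple uniform subdivision, where factor n turns one
    triangle into n * n smaller ones. The real D3D11 tessellator pattern
    differs in detail, but its output also grows roughly with the square
    of the factor.
    """
    return patches * tess_factor ** 2


for factor in (64, 32, 16):
    print(factor, approx_triangles(factor))
```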

Odd that they wouldn’t allow that, especially in the lower end cards. If it’s something that cannot be easily adjusted on the card – firmware or something low level – that would make sense. Hopefully they consider that for future cards.

That’s a good idea; I used nVidia GPUs simply because that is what I had on hand. Will definitely consider this in the future though.

O_o

Yeah, it is kinda hard to justify going over $200 for a gaming CPU. Especially if you aren’t going to spend on a 144hz or 120hz monitor. If you are like most people running 60hz all you need to do is get to a constant 60 fps and you’re golden. The most efficient way by far to get to that point is through the GPU.

Even with this comparison I think you’d be hard pressed to find an FX8320 that couldn’t reach 4.3-4.6ghz, you go that route, overclock, and you’d be set for under $150.

In a few cases, an AMD CPU will be the cause of not even managing 60 FPS. They suck. I was on an FX-8350 for four years. Four years too long.

You cannot look at a single component as the sole factor. At the time of running the FX-8350 what RAM, GPU and disk were you running? Are you sure you didn’t have other bottlenecks in that configuration?

Pretty sure you don’t know what “bottleneck” even means. Do tell, what other “bottleneck” could explain low FPS in CPU-bound scenarios? You are extremely ignorant if you’re going to argue against the validity of stating that AMD CPUs get much, much lower FPS in CPU-bound scenarios, whether in games or with CPU-bound image quality settings. The fact Intel rapes AMD silly is common knowledge.

Here’s an example; I’ll leave it at just the one, as I have better things to do. The steps of Whiterun (Dragonsreach) in Skyrim, looking down, are a highly CPU-bound spot: 30 FPS on an FX-8350, even at 4.7 GHz. On a 4790K at 4.6 GHz, over 50 FPS.

I mod Skyrim with over 250 mods but that’s irrelevant. The spot in Whiterun suffers the same fps drop modded or not.

You might think that’s not a big deal. I disagree and I’m glad to be rid of my old system.

He asked you your specs, not your opinion. If you’re running 250 mods on Skyrim, then GPU VRAM could easily be a bottleneck if you weren’t running something really high end.

Take your bullshit somewhere else. This place is for objective analysis, not “omg my opinion must be right based on anecdotes omg AMD suckz”

Oh Jesus Christ, grab a clue. Learn to read. Modded or not, on an AMD CPU you will get 30 FPS on the steps of Whiterun. On Intel, over 50.

IT’S A CPU BOUND SPOT.

I’m not going to bother listing my specs or going into detail. It’s irrelevant.

Anecdotal my ass. I owned the piece of crap 8350 for 4 years.

I don’t need any more examples and if you are seriously arguing what I’m saying you’re completely clueless.

Don’t bother typing your long winded witty reply.

My bad, buddy, I guess neither I nor the author nor anyone else knows anything :/

Sorry for debating with your irrefutable presentation of facts and thoughtful analysis of why your games ran as so, and thank you for presenting proof to what you’re saying with screenshots or benchmarks of in-game performance like the review did. I’m definitely considering your point of view now.

You are seriously arguing AMD CPUs are not inferior to Intel’s? Are you seriously still responding?

Don’t take my word for it, smart ass, go do some research. You’re arguing against facts. Intel CPUs blow AMD’s out of the water.

Benchmarking games that aren’t CPU-dependent doesn’t refute this.

No, actually, that’s common knowledge. What I am saying is that you are an ass in the way you respond to people you disagree with, in this case about whether or not the CPU is actually the bottleneck.

Without presenting any information that would lead a reasonable person to conclude that the CPU is indeed the bottleneck (which may as well be the case), you expect everyone who reads your anecdote (because that’s all it is – you have yet to reply with information that would definitely prove you right) to agree with you. Anyone who doesn’t is apparently uneducated and cannot read.

TL;DR No need to be an ass on a site where everyone loves tech. There are much politer and less exhausting ways to prove your point (and you’re right, the CPU is the bottleneck from your specs down below from a different thread) than to act patronizingly towards those who don’t immediately agree with you.

Really? I describe a CPU “bottleneck” or low fps due to a cpu that sucks at single threaded work loads and I get wanna be techs and wise asses that have the audacity to argue the fact AMD cpu’s blow ass, going off on wild tangents about ram frequency and HDD read speed. looooool

This article at best minimizes how awful AMD CPUs are and at worst is deceiving its readers, as it conflicts with just about every source of info available on the internet.

Oh and btw I have yet to respond with another example as I shouldn’t have to. Do you know how to Google? The entire internet will confirm what I’m saying. Get schooled.

Do you hear yourself? My comment wasn’t contesting what you said technically – I agreed the CPU is the bottleneck.

You still sound like an absolute dick. I don’t know if you’re compensating for something else, or acting tough behind your anonymity, but forreal, it gets tiring when wannabe tough guys like you keep posting.

It’s fine to say that AMD’s processor here isn’t as good as Intel’s processor here. No one cares that you’re doing that. The difference between you and most other people with that same opinion is that most other people would take the time to respond with a link or two (which really isn’t hard to find; no one on this site is a tech noob, and that’s why they’re all here). They would also respond with a dissenting opinion that didn’t call everyone who didn’t agree word for word wannabe techs or wise asses.

It’s not your opinion, but rather your attitude that’s really off-putting for someone who names them self buddydudeguy.

I’m not going to respond with a link or two when you are capable of Googling, which you obviously need to.

Holy shit. You really are incapable of comprehending anything except your own ego.

Lemme give it to you clearly: AMD processors are not as good as Intel processors when it comes to single threaded applications, and nobody here is disputing that.

What anyone here also agrees on is that you’re a righteous asshole.

you.

are.

an.

asshole.

see above

see above again

see above again if you still don’t get it

haha. Who’s the troll again?

Why in the heeeeeeeeeeeeeelll were you arguing otherwise, then going off on wild tangents?????

Rhetorical question. Please…please stop responding.

Is that really all you got? I honestly feel pity for you – if this is how you are on the internet, then you’re definitely the inverse in real life. Bullied, minimized, unimportant and ignored.

Please see a counselor :/ There’s a lot of stuff you clearly need to work out. You’re compensating, and it hurts me that you’re hurting 🙁

If you think you’re under my skin, TROLL, you’re mistaken 🙂 Have fun with… whatever the hell it is you do. Make up your mind.

You’re a complete and utter moron. It’s just common knowledge, I don’t even have to provide facts.

What is common knowledge? You don’t have to provide facts about what? Could you possibly make less sense?

If you’re being sarcastic in regards to my posts, yes…the fact that Intel sucks compared to AMD is common knowledge and I refuse to go Googling for any of you.

I think you’re right about everything you just said.

well played.

Stop with the troll crap. I get sick of reading comments by technology bigots who can’t see past their own bias. I love my Intel gear too, but not to the point where it’s the be-all and end-all, period.

Dude, you’re really an asshole; this opinion is based on your responses. He is not trolling, you’re doing the trolling here. Shit, even a 3rd-grade level of comprehension has slipped past you, as you’re too egocentric and narcissistic to even accept his comments, just because he doesn’t subscribe to your opinions!

I’m using an AMD FX9370 OC to 5.1 ghz with ram at around 1866mhz and two sapphire radeon R9 290’s in Xfire also using skyrim modded with 4K textures in 4k on my ASUS PB287Q and I have ZERO issues with the white run steps even at 4K ultra with 4K textures. Take your Intel fanboy faggottry out of here.

haha. Whatever you say. You're so full of shit your eyes are brown. It's not an "issue"; it's a CPU-bottlenecked spot, and you are not at 60 fps looking down from the steps of Dragonsreach.

Do I have to record the game and show you? Are you that ignorant?

rofl! Do eet! I will show you footage of my 4790K wiping the floor with your fail-ass FX.

I see your ignorance knows no bounds.

By what? 15 seconds and 20 fps or more? Makes no difference. That's the Intel fanboys' defense, since they feel bad about blowing so much money for 15 seconds or 20-plus fps. It's the video card that does the work.

hahaha. Tell me that 20 more fps doesn't matter when you get 40 fps and I get 60. You're in denial. Fail-arse buyer's remorse.

Not in the games I play. As long as you can get to 70 fps, the games run fine for me and my AMD.

"it's the video card that does the work" ya… you're an idiot.

The CPU sends the info to the video card. That's in the Builders 101 guide, read it!

I have two 8350s, one on air @ 4.6 and one on an H100i @ 4.9. I also have a 4790K @ 4.8 on all cores, and a 5930K @ 4.6. For anyone to claim the 8370 performs the same as the 5960X, a $1000 chip with 16 threads, is FUCKING RIDICULOUS!! The FX chips weren't even that good when they came out 5 years ago. After reading this smut, I ran a good number of these benchmarks. Absolutely ZERO of them were even close to the results in this bullshit article. If you like AMD, that's fine, have at it. But to think that a chip with 5-year-old architecture can stand toe to toe with what is right now the most powerful consumer-grade chip on the market, you must have brain damage. My 8350 with one 980 Ti and 16 GB of DDR3-1866 on an Asus Sabertooth 990FX scored 10232 in Fire Strike. All I did was swap out the chip and board for a 4790K and a Z97 Mark 1 Sabertooth, and my score went up to 14968. Buddydudeguy, there's no point arguing with AMD fanboys; it's just as bad as arguing with console peasants. GET EDUCATED PEOPLE!

Thank you. For Christ's sake, I was arguing with ignorance.

Well, Buddydudeguy, I think we're in the wrong place to expect intelligence. We're only going to get ignorance unless we have a conversation. Someone asked me for proof of my "CLAIM" that the 5960X is a better chip than the 8370, and apparently the 5 links to REPUTABLE sites such as AnandTech, OC3D, and Linus Tech Tips weren't proof enough. They want my benchmarks of the 5960X, a chip I clearly stated I don't own. But I'm still gonna bench the chips I have. Good day to you, sir.

Guys… I don't want to have a thread cleanup session. Let's stick to the context of the article and leave personal verbiage out of it, shall we?

I wouldn't even provide "proof" that a 5960X is better than an 8370, lol. That's not worth responding to.

Education is very important, and part of being educated is understanding why benchmark scores differ when making comparisons. Much of the difference in scores in Fire Strike's default benchmark is due to the software giving the Intel chips a fairly large advantage in the way it makes use of the cores on the AMD chip. Run it again and pay attention to core usage on the AMD compared to the Intel, particularly during the combined test. What is funny to me is that a 4790K locked at its turbo frequency and an FX 8-core locked at the 9590's turbo frequency will score very similarly in Fire Strike Extreme, and the FX beats the 4790K's overall score on the Fire Strike Ultra setting (both using a stock 290X).

Another important point to understand is how a synthetic benchmark relates to how the machine is actually going to be used.

Don't reply to him; he will just flag your comments, and they'll be removed. It seems pretty clear that it's the buddy guy.

Same gpu and ram in both rigs. 🙂

Yes, actually. I own two 970s and two 980 Tis. In total I own 64 GB of Corsair Vengeance Pro DDR3-1866 and 32 GB of Corsair Vengeance DDR3-1600. I use 16 GB of DDR3-1600 for all benchmarks. Lastly, I was not saying that AMD sucks. I own both AMD and Intel chips. My main argument and issue with this article was that all benchmarks across both chips were the same. Both overclocked and stock, there were no variations. Not that AMD was the same as Intel. I'm well aware that you get no major gains in gaming with a 1000-dollar chip. But under no conditions, except ones controlled to show the results you're trying to show, will a stock 8370 perform no differently than an overclocked 8370. Nor will a 5960X perform the same at 3.0 GHz as it will at 4.4 GHz. That's all I was trying to say. If people wanna believe that, more power to them. Whoever did these benchmarks should run Cinebench R15 on both setups, and then let's see what they come up with.

Go to ANY REPUTABLE source (AnandTech, pcper.com, Linus Tech Tips) and compare these benchmarks. They will not hold up.

Don’t waste your time.. he’s just here to crap on AMD.

Some links for a more in-line Intel vs. AMD comparison, based more on price than on $1K CPUs:

https://www.anandtech.com/show/8316/amds-5-ghz-turbo-cpu-in-retail-the-fx9590-and-asrock-990fx-extreme9-review/8

https://www.xbitlabs.com/articles/cpu/display/amd-fx-9590-9370_5.html#sect0

https://techreport.com/review/26977/intel-core-i7-5960x-processor-reviewed/5

https://www.techspot.com/review/875-intel-core-i7-5960x-haswell-e/page9.html

https://www.bit-tech.net/hardware/2014/08/29/intel-core-i7-5960x-review/10

Look, man, I get it. CPU-bound games: Intel > AMD in your opinion. And in 90% or more of those cases it's true and has been proven time and time again. But it doesn't mean that every single person needs to avoid AMD APUs, even if they're playing games.

Remove MMOs from the equation for the sake of argument. WoW, Skyrim, FFXIV, etc. will always be CPU-bound, even on high-end GPUs in SLI/CrossFire. That's just the nature of the beast, and has been ever since my EQ/SWG days. I don't play a lot of physics-intensive games, and many of the folks I build for play games like Minecraft, SC2, or MOBAs. The reason I use Intel in my desktop is purely Android build times and other programming/development work.

It goes back to basics again: what do people use the PC for, and what does the job best for them at their price level? What works for you will be different from what works for me. And since everyone's situation is different, why not discuss those and let others decide?

WoW, Skyrim, FFXIV? Skyrim isn't an MMO.

I’m trying to walk away but come on guys.

You should really go back to school. He never said Skyrim was an MMO; he specifically stated that it's "physics intensive" and "single core dependent". Dafuc is wrong with you, failed 3rd grade 20 times now?

Of course Intel CPUs are better. You're going to spend $100 more on the chip and $30 (it used to be $100) more on the motherboard for that Intel chip, so it had better be faster.

Right….?

I'm sorry, were you making a point? In your other post you were saying how awesome your beast 8350 is.

You're doing it wrong too, using the base clock… or do you have a fail-ass non-Black Edition?

I'm glad we've established Intel is better… wait, I'm confused. Can all of you make up your minds? One minute you're arguing objective fact and the next you're talking about how beast your 8350s are and how wrong I am.

Nah, what everyone is saying is that you're an asshole who is overcompensating by acting tough on the internet, cupcake.

Just read the thread and this comment says it all.

Way to behave like a prick in the comments section. Everyone is talking to you like a human being, and the bile still foams at your mouth.

Gee Gee fanboy.

What GPU were you on?

Relax…It’s a CPU…

Just in case it wasn't incredibly clear he's making it up, I'd like to point out that ALL FX-8350s are Black Editions. The locked multipliers are gone on FX CPUs.

All FX chips are unlocked, moron!!! See, there you have it, no clue from you!

The issue is the ALL CAPS replies at the start and the aggression. Also, people who lower themselves to name-calling usually won't hold much ground, because it just looks like they are throwing a pissy fit to try and get people to see what they see, and it usually never works.

You could have said all you said early on, that clock for clock Intel is better, and then provided links and proof. Most would then argue back that they can get an AMD 8-core for $200, while an i5 is $200+ and you only get 4 cores.

So then you argue that the i5 will still beat the AMD [insert links] and even an i3 [insert links] can beat an AMD for reason XYZ.

Just learn to be less confrontational, and I am sure many people here would have been agreeing with you from the start.

There are the hardcore AMD people and the hardcore Intel people. In the end, people need to learn that neither company gives 2 craps about them, so support the product that gives you the most performance for your money, which in reality is Intel almost completely across the board, with a few exceptions for CPU-hungry applications that can utilize 8 cores for $200.

I'm sorry, you want to tell me that for $200 you can build a better gaming PC with Intel parts? Bwhahahahahaha

Not really. Most places are now selling 990 chipset boards for the same prices as new Intel boards, and remember that chipset is almost 4 years old, with nothing new really going into it.

Also, most people buy an AMD 8-core thinking "WOW, I got 8 cores," when for gaming and most day-to-day usage an Intel i3 would perform just as well, and in some cases faster, cooler, and using less power.

If you're comparing the 990FX to a cheap H87 board then sure, they're the same price, but if you want to overclock like many gamers, you can go with a $60 AMD 970 board, whereas you're going to have a hard time finding a decent Z87/Z97 board for anywhere near that cheap.

If we're talking about JUST gaming and JUST right now, then sure, there are a couple of decent i3s that will perform just about as well in games as an 8350, but when DX12 and Vulkan get going in the next year, that 8350 is going to smoke any i3, and in multithreaded apps it already does.

You're right about the power, though: the AMD solution will cost you at least $2 a month more in power than the Intel one. On the other hand, if you're like me and live somewhere that gets cold in the winter, you end up saving money with AMD, because the extra heat translates directly into less need to run the house heater.

Next I think we should discuss how much computers and CPUs in particular cost before Intel had any serious competitors, as well as every time they gained a significant lead in performance, and you can explain to me how supporting a company that price gouges every chance they get is a good thing, meanwhile I’ll be saving you hundreds of dollars on your next CPU purchase by helping to support their competitor.

OK, let's try this… Which car would you rather have, a Mitsubishi Evo or a Nissan GTR? The GTR is clearly better (Intel), but the Evo (AMD) does fine for half the price. I know perfectly well that Intel is faster, but I refuse to pay the extra money for it, because that little boost isn't worth it IMO. And for the love of God, reread the last paragraph: if you have extra money, buy Intel, but if you're on a budget, get an AMD.

BTW, both cars will get you pulled over at the same speed, so the track is the only place you'll see your GTR (Intel) outperform. Nürburgring lap times: 2011 GTR, 7:34, $68k; 2006 Evo 9, 8:11, $33k. (Yes, I ignored the 2015 Nismo GTR, because at double the cost of the regular GTR it would basically be like citing a supercomputer @ $150k, and it was designed totally with that track in mind.)

To each their own. You should learn that value is an important part of things; otherwise, why didn't you even think to buy DARPA's 1 THz chip? (I know, it's not for sale to the public, but it proves the point.)

"Fine" is subjective. The FXs result in substantially lower FPS in many cases, numerous enough for me to call them just straight-up bad compared to the alternative.

well said!

Haha, I know it's not relevant, but a modified Evo will always be faster than a GTR around a track. There is no way around physics, and the Evo will always be much lighter than the GTR, so the Evo will be much quicker as a track weapon. Just my 2c. It's the same story if you look at superbikes: 600cc bikes will demolish a Hayabusa around a track. The Hayabusa is too heavy, although it's faster in a straight line.

Oh look another insecure troll. “Meh experience was bad. Wah intel legit m8s” GR8 B8 M8 i R8 8/8

I have an FX-8350 (not overclocked) on an Asus M5A99X-EVO R2.0 paired with a Sapphire R9 280X Tri-X OC and Crucial Ballistix Elite RAM at 1866 MHz CL 9-9-9-27 1T, and I get 60 fps looking down the stairs in Dragonsreach at a resolution of 1920×1200. I don't even have an SSD; I own a pretty old WD Black 640 GB, still good though.

FX 8-core, ultra settings. Just stomping out ignorance 🙂 https://uploads.disquscdn.com/images/ef082f069c69f9a631c5559378318608c49803a67e98feb98babaf4649cf0b48.png

Unmodded you dense moron. BIG DEAL.

“anecdotal” lol I’m still chuckling. AMD’s inferiority is common knowledge not anecdotal.

Gee running out of vram hurts performance! I dudn’t kn0w dat!

lol you guys are hilarious. You're right! I don't get more fps in Skyrim with Intel, it's all in my imagination. Must be my slow 1866 RAM too! We all know RAM speed over 1600 MHz matters, right? Wait… no, it doesn't.

You guys need to get learned.

Once again, DDR3 compared to DDR4. The bandwidth of DDR3-2133 is 27,000 MB/s, and DDR4 is roughly double at 60,000 MB/s. Yes, the bandwidth has a lot to do with performance.

https://www.micron.com/products/dram/ddr3-to-ddr4

https://www.corsair.com/en-us/blog/2014/september/ddr3_vs_ddr4_synthetic

Now stop talking out your arse!!!

I think AnandTech did a thorough review and testing of DDR3 RAM speeds and found that anything over DDR3-1600 you will only notice if you run benchmarks all day long; no one will see a noticeable real-world difference.

That's not entirely true. RAM is only utilized, not used. For example, in games, utilizing more than 4-8 GB of RAM is quite a feat, while rendering can utilize up to xxxx amount. Yes, there is a noticeable difference while rendering. Furthermore, the higher the RAM settings are, the more instructions per clock you see, i.e. 1600 MHz uses CL9, 1866 MHz uses CL10, and 2133 MHz and sometimes 2400 MHz use CL11.

rofl!!!

Low FPS in CPU-bound scenarios can still come from RAM and disk reads/writes not being able to feed the necessary information to the CPU in a timely manner. It happens to me all the time when I'm doing Android builds, which are absolutely CPU-intensive.

By the way, I’m curious – how DID you get that FX-8350 4 years ago? Part of the test group prior to its release?

Lesigh…

16 GB of DDR3-1866 and a 250 GB SSD. Happy? Grab a fricken clue.

And har har you’re not only clueless but you’re a joker too!

What the fuck ever, 3 years… 4 years… I should have spent the extra $100 and gone Intel to begin with.

What part of I get this fps on this hardware and this fps on that hardware do you find hard to grasp?

Go ahead, run Skyrim, go to the steps, and look down. Your fps will be much higher on Intel.

Thank you. Depending on what GPU you had and how much it was relying on the CPU to pick up the slack, it is indeed possible for the CPU to be a choke point. In CPU intensive tasks, like building Android, the 8350 will build slower than an i7-4770/4790.

Everything has to be considered in trying to identify these issues. The SSD certainly wasn’t holding things up but that RAM could have been a factor, based on my own experiences going from DDR3-1600 to 2400. Curious what you’re running now, if you’re willing to share.

Do you honestly think you're teaching me something with your responses? You're darn straight: in CPU-intensive tasks (namely CPU-bound games) AMDs choke. There is nothing to "identify", and you're arguing against objective fact.

I'm not some noob who uses low-end parts, buys prebuilts, and/or doesn't know these things.

I object to this article cuz it uses fairly GPU-bound games and leaves out key facts… like that in a few games your fps will be abysmal if you're on an AMD CPU. 20-50% less fps just because you chose AMD for your CPU is nothing to scoff at.

Don’t know. But this back and forth might answer questions from another reader.

In EVERYONE's gaming situation, if the scenario/game is CPU-bound, your fps will be abysmal compared to Intel. It is perfectly valid.

The problem with that argument is that not everyone plays CPU bound games. What would you recommend for a kid playing Minecraft, for example?

I said if the scenario/game is CPU-bound. Logically, if it's not, then your choice of CPU doesn't matter.

A lot of the time AMD CPUs do fine. The rest of the time they suffer. It's the rest of the time that bothers me, and the rest of the time happens often enough to matter. If you're broke-ass, then by all means use an AMD CPU/mobo. Just don't come here arguing, asking what my HDD is or what speed DDR3 I'm using, when it's been said how bad AMD CPUs are in CPU-bound scenarios. It's way off on a tangent. If you know darn well AMD CPUs are relatively bad, then why argue in the first place?

Every single response from you is either common sense or off on a tangent, when you could have accepted it as a simple example of AMD's horrible single-threaded/per-core performance.

And your example is terrible. Minecraft is CPU bound. I don’t play it and I don’t see the appeal but at least I know that much.

If you want a good experience with it, get the best CPU you can afford. For a kid playing Minecraft, or any other game for that matter… of course you go for the least expensive option that is adequate.

I’m not even sure what you’re saying any more.

Definitely not an AMD CPU at the moment. Minecraft does not handle multithreading at all. The G3258 would perform just as well as an 8350 in that scenario. Actually, both are quite close: the G3258 pulls more fps but is less stable, while the 8350 is consistent and only has frame dips once in a while.

Minecraft doesn't need to be multithreaded; you can run Minecraft maxed out on a cheap APU from AMD. People have the misconception that because games like Minecraft are basically single-threaded, they must require an Intel. If you look at frame times on a Pentium, they are pretty crap in games like GTA 5; even if it gets high FPS, the stuttering is abysmal, which is why you basically need an Athlon 860K or an i3-4150 or higher to handle most games decently. Anything above the i3 or 860K makes a pretty minuscule difference for most games.

Well, a good CPU and a HDD since rendering chunks is very CPU intensive.

Try playing Minecraft on an AMD A8-6xxx and then on an Intel C2Q 8200. The Intel one will win; it's 6 years older but 1.5x faster.

Odd that you're saying things in the comments of an article that completely contradicts them; it looks like every game in this article manages 60 fps on AMD.

It's OK, the majority of the knowledgeable people realized you don't know what you're talking about the moment you said that games are "CPU-intensive tasks", when the reality is that almost no games will use more than 2-3 cores. You're wrong about that, you're wrong about how long you had an AMD CPU, you're wrong about them not being able to hit 60 fps, and you're COMPLETELY wrong about your numbers: there is no 20-50% difference in FPS between AMD and Intel in gaming, period. It's more like 2-10%, with the majority trending toward the 2% end.

Dunno, personally I get 60 fps in everything, because I overclocked my 8350 by upping the FSB to 220 and jacking the RAM speed through the roof, which is yet another thing you're wrong about with regard to your PS statement.

Ya you’re right. AMD cpu’s are great, not a joke compared to Intel at all. Totally man! 60 fps in any game!

The entire internet is wrong and you’re right!

Keep on sniffing that glue man. Keep on keep on.

lol you think RAM speed matters for gaming. I got better things to do than type at clowns like you.

Lol, here you just saw an 8370 keep up with the best Intel chip on the market. Yet you still argue. I am running an 8320 at 4.6 GHz with a GTX 670 DCII edition, and my friend has a 4th-gen 4790K with a GTX 680 DCII. Both rigs use 2100 MHz RAM and Samsung EVO SSDs, and we get almost identical frame rates, with a 2 to 5 fps difference in games. Just because you have modded a game with badly designed software and textures, or coding that favours one CPU over the other, does not mean that the CPU your mods were not optimised for is bad. But everyone can see you are an Intel fanboy, and that's your opinion, which you are entitled to. Just don't post utter BS and knock things you don't like. Posting misleading BS because you are a fanboy causes people to get annoyed with you. No one is convincing you to buy an AMD; buy what you want, just don't talk nonsense to justify your choice of CPU.

With GPU-limited testing… now toss in real high-end cards to go with that high-end CPU. Who would buy a $1K CPU and toss in some 960s and 970s? No one would, if they bought the $1K CPU for gaming.

And to note, anyone who buys a $1K CPU for gaming is not too bright either, unless they plan on a tri-SLI/CrossFire config with high-end cards.

As noted, they could have tested with an i3 as well and gotten the same results vs. AMD… so should we now defend the Intel i3, since in the end it is faster for gaming than AMD in almost every game they tested?

hahaha!!! There are LOTS of examples out there with 20-50% more fps on Intel. I'm not going to google it for you. Have fun with your "FSB", NOOB. And I am very, very right about RAM speed. Are you on an APU? Then RAM speed does matter. If not, you're way out of your element and don't know what you're talking about. You're lucky if you get 2 fps more with faster RAM.

haha!!!

Are you on an APU??? HE SAID HE WAS ON AN 8350!?!? Something is telling me that you are some little 12-year-old defending Intel right now…

Get… a grip.. geez

haha!! Not even going to reply to you.

My question was rhetorical, dumb sh9t. The only time RAM speed matters, like… AT ALL, is on an APU.

Why are you so rude? What is the point of being a prick? He is simply stating what he stated; he asked you about your hardware, and you gave him lip instead of just trying to find the problem and accepting his help. You seem to be an Intel FanBoi, nothing more. My bet is that it isn't the CPU but rather the PCIe lanes. Go figure that you see a difference between PCIe 2.0 and PCIe 3.0. Also go figure that you see a difference with DDR4 compared to DDR3, let alone dual-channel DDR3 vs. quad-channel DDR4; I mean, the bandwidth is higher. Stop being so ignorant; his benchmarks are in line with other benchmarks. It can even come down to what chipset you were using (my bet is the 970 chipset, as you bought the FX chip at its release). I tend to agree with the other people here: you have absolutely no clue what you're talking about.

You are half right on this one: Skyrim only taxes one core, and AMD CPUs are terrible in single-threaded scenarios.

Have you tried meditation?

The FX-8350 isn't that old.

Me, waiting for Zen.

Um..yes it is.

Intel has been through… how many revisions, while AMD has done nothing but release an overclocked, higher-TDP 8350 (the 9590)? It's been a number of years. Stay away from AMD until Zen. Their CPUs suck.

Thank you! Someone with common sense.

You're right, this test is bullshit and faked by using GPU-bound scenarios and subpar GPUs (1440p using a 970? That GPU can barely manage ultra at 1080p…).

Pretty much, they do not strain the CPU. Toss in 2 or 3 cards in SLI/CrossFire, use a 980 Ti or Titan or some 290Xs or dual-GPU cards… then see where Intel will shine. A $1K CPU is not bought for a gaming rig to use 960s and 970s in…

Then trim the Intel back to the $300-range CPUs, compare those to the $1K one, and see the same results.

Then toss in an i5 and an i3 and really start to see a CPU-bound problem, though they'd likely still stay close to the AMD…

Skyrim is absolutely atrocious on CPUs and strongly favors Intel. Also, "looking down" isn't really relevant, although I understand why you would test that way.

Also, the reason WHY AMD performs so poorly in Skyrim is that Bethesda has always had horrible optimization. i3s and Pentium dual-cores run it just as well, because they didn't bother to include any optimizations for anything newer than the P4 architecture.

As a former owner of an FX 6-core, Skyrim ran pretty shitty for me too. But the big CPU-bound games that are dual-threaded (RTSs, MMORPGs) ran well, and although the i7-3770K I got performed about 20-ish percent better, I didn't see the huge jump you are describing.

Donny mentioned several times in this article that there ARE games that Intel will run better with, but most games will run just fine on AMD.

If you play a lot of Skyrim I can understand your issues, but that is on Bethesda's hands, not AMD's. I've put hundreds of hours into Fallout 3 and New Vegas as well as Skyrim on PC, and I will be the first to tell you they aren't polished at all.

You should learn how to articulate your arguments without being so abrasive.

So I may be ignorant too: "30fps on fx-8350, even at 4.7ghz. On a 4790k at 4.6ghz over 50fps." So a CPU that costs $320 is better than one that costs $170? This sort of thing is what the article was showing. It says Intel's chips are faster, but are they worth it for most gamers, as people think?

(Sorry for the late-to-the-party comment; this article just showed up in my feed.)

IMO yes, the investment is worth it. By the time I ditched my FX-8350 I was downright sick of the lousy performance. I'm loving my 4790K and wish I'd just gone Intel to begin with. As for your question… the thing is, so many people see higher fps on Intel and think that means FXs "bottleneck" GPUs. Fact is, most people misuse the term and shouldn't bloody use it. More fps on Intel is always due to superior IPC/per-core performance in CPU-bound scenarios. In anything that's GPU-bound, an FX CPU will not "bottleneck". More fps on Intel in this or that area of a game, or in this or that game, simply means the game is more CPU-dependent and favors Intel's superior IPC.

On the other end of the spectrum, noobs flat out deny that Intel is better in any substantial or meaningful way. They are flat out wrong and kidding themselves. Granted, in perfectly optimized games and games that aren't very CPU-dependent… FXs do fine! The rest of the time they suck. Case in point… Skyrim. You get A LOT better performance on Intel with Skyrim… or any game that likes a good CPU, for that matter.

AMD’s current cpu’s SUUUUUUCK.

"Granted in perfectly optimized games + games that arent very CPU dependant…FX's do fine!" Since this is my point, I will agree with what you told me and let you bug that other guy with the bottleneck comment.

Problem is, there are many cases (games, parts of a game, whatever) that are CPU-dependent and run much better on Intel. The list of games that are purely GPU-bound, where the CPU doesn't matter, is really rather small. Even then, "fine" is relative and subjective. "Her der, 20 fps isn't a big deal," they say… when you see 40 fps and could be getting 60, ya, it is a big deal.

I don't run at 1080p. My 720p serves me just fine with my AMD. The 20-30% extra fps from Intel does not justify the overcharge to the consumer. Intel plays you guys like morons and you fall for it. Lastly, the games I play don't need a high frame rate.

FX 8-core/780 Ti, Skyrim at 300 fps https://uploads.disquscdn.com/images/d5425da43ff22a91f23656a3f209ca0114b3cb1a8bb4b56574ec4cb5684c6829.png

AHAHAHA. Have fun with the broken physics. Who the f8ck plays Skyrim without iPresentInterval on? You're doing it wrong and missed the point ENTIRELY.

You disabled iPresentInterval, play with no mods, and take a screenshot with nothing happening (let alone on the stairs looking down at Whiterun), and you think you're proving me wrong… I'm still chuckling.

Is that why you replied twice 😉 ?

Most of the time I’m in the 100 to 120 fps range with vsync disabled. I believe 72 is the lowest I saw while in game.

With Vsync enabled I averaged 59.8 fps on ultra settings.

Post a screenshot of the exact location you are referring to along with your graphics settings; I'd be interested in seeing what you mean (an fps counter would be good too).

With no mods. Wow, what a feat. And you do not get 72 fps on the steps of Dragonsreach looking down at Whiterun 🙂

oh my goodness, look what we have here 🙂

Enjoy the screwy physics bugs with high fps, btw. You're a noob. You don't even know what I'm talking about when I say the steps of Dragonsreach.

Now run along, go install texture mods, DynDOLOD, Verdant grass, and an ENB, and tell me you get 120 fps with 72 being your lowest.

I was most interested in comparing at the exact spot you seem to be obsessed with. But I can see you are more interested in making inflammatory remarks than in actually learning anything. It seems like you are very insecure and your ego couldn't accept anything that threatens your self-made illusion of superiority.

Ya das totally it dood.

You didn't seem very happy about me disabling vsync, so I re-enabled it. It doesn't seem to matter where I look; I'm pretty much locked at 60 fps. Here are the settings etc.

You’re schooling me kid.

Between you and me, I’m 50 years old 😉

That was sarcasm, genius. You haven't schooled squat, and you have completely missed the point while babbling about fps that is neither here nor there and proves nothing. I don't care if you're 90.

One of us knows exactly what he is talking about and posts proof; the other one just talks a lot. 😉

Proof of what??? You are so off on a tangent it's hilarious.

I'm gonna spell it out real plain and simple for you, not to mention repeat myself… Follow the STEP guide for mods. On top of that, get Verdant grass and an ENB of your choice. Now run along, do this, and tell me you get 120 fps. In fact, I want to see a screenshot. I don't give a flying crap what fps you get with vanilla Skyrim. No one does. It's not demanding. In any case, the steps of Dragonsreach are indeed a CPU-bottlenecked spot, and you will in fact get really bad fps looking down the steps at Whiterun, modded or not. And for the bloody tenth time, you will get worse fps there on AMD than on Intel. Seriously, grab a clue. You're boring and annoying with your vanilla screenshots. Who the hell plays Skyrim with no mods to fix how arse-ugly and dated it is?

I love your noob screenshots of your noob settings too. 8x MSAA, lmao. First off, who the frack uses 8x MSAA? 8x is well past diminishing returns and hurts your fps. Second, from that screenshot alone I can tell you play Skyrim without an ENB, since you disable in-game AA and AF when you use ENBs. Vanilla, unmodded Skyrim is nothing to brag about and is really, really bad ammo to bring to an argument. You're 50 years old? You come off like a teenager totally new to PC, arguing with me and posting your noob-arse vanilla screenshots.

It would appear that you are the one running short on "ammo", as I have posted proof that you are mistaken about the capabilities of the FX 8-core. I understand that at the same clock speed, in a game that uses basically one core, the Intel will give higher frame rates. However, the FXs can be clocked up to a point where you cannot see a difference between them and a 4790K.

As for being a computer "noob": I cut my virtual teeth on a Commodore VIC-20 back in the early 1980s and have been building my own machines for about 15 years. Within arm's length of my desk I have 2 FX 8-core rigs, an Opteron 180, a 4790K running at 4.9 GHz, a 2600K @ 4.5, and a 3770K @ 4.7, all on watercooling. I compare them against each other almost daily. Approximately 25 other CPUs are strewn about just waiting to be played with. I've been in the top 100 of the Enthusiasts League over at HWBOT (out of around 50,000).

I don’t have any particular stake in the discussion, other than that I don’t like ignorance (my own or others’), and if someone makes a claim that is contrary to what I believe, I respond with curiosity rather than anger and seek the answers for myself.

I have the feeling that no matter what I post, you will have a problem with it if it shows the truth to be anything other than what you want it to be.

You got the downs syndrome man. Stop posting. You posted saying how much fps you get with NO MODS, for Christ’s sake. Who the f8ck cares!!! Vanilla Skyrim isn’t demanding! Whatever fps you get, you will get more with Intel! WTF part of that don’t you grasp? Namely… the steps of Dragonsreach looking down at Whiterun… your fps plummet on AMD. Not so much on Intel. Same for any other specifically CPU-bound spot. You’re an idiot and I’m sick of getting notices that you brain farted again and posted. Grab a god damn clue.

lol, skim-read more of your insistent babbling… enthusiasts league! LOL with an AMD proc. You’re a clown. Stop posting. If I could ignore or mute you I would. Doesn’t seem to be an option.

You seem upset. It might be healthy for you to admit you were wrong; you’re only human, after all. Could be very liberating, and it’s not worth losing sleep over. Be well :).

https://uploads.disquscdn.com/images/09d6416db6040c39af32814c7af9c6c17fd47df37f00b9bc04bf7fef31044526.jpg https://uploads.disquscdn.com/images/72e80b429ab0004bdf7bc7d96087880a0a621bab42ef20f77159f828664f1a9b.jpg

I’ve been working 60+ hour weeks, so I haven’t been playing very much at the bot lately, and I’ve lost some places in the enthusiasts league.

Stop derping, you make my brain hurt.

Well, at least it’s a small hurt 🙂

I’m not the one arguing how awesome sauce AMD FXs are. You’re fking dense.

No, I simply speak the truth. You either don’t know what you are talking about, or are just trying to elicit a response through childish trolling. To recap, you have been spouting that the FX is terrible in games like Skyrim. In response, I posted a screenshot of my FX rig pumping out 300 FPS in Skyrim. You weren’t happy about where I took the SS or the fact that I had disabled vsync. So I posted a SS of my FX rig in Skyrim with vsync enabled at the spot you suggested, maxing out the frame rate at 60 FPS. You doubted that I get 72 fps in that spot with the FX rig, so I disabled vsync again and promptly posted a SS showing… 72 fps on those steps you are obsessed with. Just countering ignorance with the truth.

haha. You’re right, AMD FXs are awesome.

https://www.gamersnexus.net/game-bench/2182-fallout-4-cpu-benchmark-huge-performance-difference

AMD CPUs get smoked. SMOOOOKED. Look at that crap. Sub-60fps. Intel at 83.

You’re an idiot.

Sigh, did you even take a look at what the “reviewer” did there?

He put the 9590 on a 970 board, which is a handicap, and the board he used does not even officially support that CPU.

Steve has lost a lot of credibility in my eyes. Don’t blindly accept information people present to you… they are quite often 1. inept or 2. pushing an agenda. Eventually I’ll get Fallout 4 and run the benchmark for myself. I will be very surprised if I can’t come very close to the numbers the 4790K put up in that review, even given the fact that his graphics card is better than mine. Either way, when I do, I’ll post the results here. Do you happen to have the game?

“sigh” is right… you continue to argue AMD’s current CPUs are on the same level as Intel’s. You, sir… take the cake.

That’s very contentious, because in some applications they are and in others they are not. Generally, AMD’s CPU performance supports the price points at which they are being sold. The problem I have is when people who don’t know what they are talking about say things that aren’t true, for whatever reason. I am fortunate enough to own several examples of CPUs from each company, so I have a good understanding of how they compare against each other. I share that when I think it would be helpful. They are what they are; I’d happily run anything people request on my FX machine for comparison’s sake. The important thing to ask before buying your hardware is: will it do what you need it to do, well enough to make you a satisfied customer? If you need a screwdriver, don’t waste your money buying a hammer. Related note: always be skeptical of numbers someone presents you…. ALWAYS!

So what do you think my FX 8-core rig with the 780 Ti will get for FPS in Fallout 4 at the settings the fellow in that extremely questionable review used? I mean, he had the 9590 giving a lower minimum frame rate than one clocked 1.4 GHz slower. Common sense dictates that the benchmark was botched (most likely because he used a CPU that isn’t supported by the motherboard… completely irresponsible). The kicker is that he went on to say “it stuttered like AMDs tend to do”, which is absolutely false; if he had actually looked at his data and been responsible about it, he would have known it was a mistake.

Having run the 4790K and FX 8-cores side by side for most of a year, with the 4790K locked at its turbo speed of 4.4 GHz and an FX locked at the 9590’s turbo speed, I can say that in the overwhelming majority of games out there you won’t be able to see a difference between them.

The human brain can’t process anything over 65 fps, so what difference does it make if an Intel does 83 fps? You won’t see it. Intel knows this but still pushes higher fps to idiots who don’t realize that.

What games? That is something most people fail to mention.

That’s interesting, seeing as how the FX-8350 hasn’t even been out for three full years yet, let alone four.

How is that possible when the FX 8350 was released in October 2012? The CPU is not even 3 years old yet.

I own multiple rigs and technologies, including AMD gear (both CPU & GPU), and find their 8-core CPUs to be more than fine for high-performance gaming.

Because you’re likely GPU bound, not CPU bound. If you’re gaming at 1080p, an i3/i5 would also be fine for most games for most people.

What a stupid comparison. AMD’s FXs suck. Why are they using a select few games that are not CPU bound to do this comparison?? It doesn’t even show how bad AMD CPUs in fact are.

Get a life….

LOL, Intel got their HTT from AMD’s SMT, which was in the Athlon 64. Athlon 64 tore Intel apart, from within. Zen is supposed to reintroduce SMT and come out with chips similar to Intel’s, i.e. 18-core/36-thread server chips, 8-core/16-thread workstation chips, and 4-core/8-thread consumer chips. Don’t put it past AMD to take over again. And if I remember correctly, didn’t Intel just lose two lawsuits, one against AMD and one because Intel stacked the data in the P4’s synthetic benchmarks? You’re just a hater, spewing nonsense. It’s noticeable from the lack of knowledge that you repeatedly post!

I don’t need a history lesson, and the Athlon 64 days were some 10 years ago. WTF is your fail ass point? We’re talking about NOW, not hopefully some day soon. Try again 🙁

Every single thing I’ve posted is a fact. And you come here babbling about the Athlon 64, hahahaha. A hater? Spewing nonsense?? AMD’s current chips SUCK. It’s a fact you need to face, moron.

It says in my comment that the Zen core is supposed to return to SMT and leave CMT. No one is hating on Intel; I’m simply saying that AMD excelled at SMT back in the day and beat Intel because of it.

I’m running an FX 8370E, and I have no problems with it. You had a problem with your chip because you don’t have any idea how computers work. I OC’d my chip, and it scores right below the 4790K in ALL synthetic benchmarks.

AMD’s best chip right now sells for less than an i5 and outperforms it. Intel i5s are great for gaming, and so are FX chips. I don’t know where you got the idea that FX can’t play Skyrim; mine works great for it. My single-core score is 115 in Cinebench R15. Hell, even the new CPU-Z software offers a benchmark, in which the i7 4790K scores 10%, or 600 points, better than my FX CPU. In multitasking, the i7 4790K loses to my chip by 12%, or 1,100 points.

You are the hater here, as no one else here seems to have had such major issues as you have. We also didn’t need to falsify our stories. We also knew that all FX chips were unlocked (you claimed certain 8350s weren’t BE). And yes, RAM speeds and the resulting latency do cause differences in the system.

As stated before in comments, you have no clue about computers, you’re just rude to anyone who disagrees that the money you spent on the Intel system was justifiable.

I own 2 AMD-based computers, 2 Intel-based laptops, and 1 Intel-based server. I mean, we can talk about my consoles too if you want! I’m far from a fanboi, and I’m anything but a hater. I have no problems with either of the manufacturers, apart from trying to use my FX on a 760G chipset board (which I mentioned above).

I don’t believe you owned an AMD system, and if you did, your configuration was trash!

https://uploads.disquscdn.com/images/3fc847e6bfa55554c56cef3f2bed579b58eab292d4866fea86929ef623906011.png https://uploads.disquscdn.com/images/c18c395ba3a8d998ef9b3d8d474a59be75d4e3124b2692da900a257d0b87f003.png https://uploads.disquscdn.com/images/c9a0687f53682f8fab9e41c7ea1b6d5aa6188929b0e9e6991edc87699c7f69be.png https://uploads.disquscdn.com/images/bcdfdc9117a8b8b1d806e2e3c088b0cdb7821a3851ceaa014e06700e02589dec.png https://uploads.disquscdn.com/images/46f2e5a9a2cdeb8a8f98c0ca2f7a2139792032f85d7108707d79342ff0444ee4.png https://uploads.disquscdn.com/images/7ec6fc2c2a12dad025ce997bc8f73cbe8959fe9f4c0ce8530f22af9111ff02a1.png

Let’s put CPUs up against others closer in price and be more realistic than the article:

https://www.anandtech.com/show/8316/amds-5-ghz-turbo-cpu-in-retail-the-fx9590-and-asrock-990fx-extreme9-review/8

https://www.xbitlabs.com/articles/cpu/display/amd-fx-9590-9370_5.html#sect0

https://techreport.com/review/26977/intel-core-i7-5960x-processor-reviewed/5

https://www.techspot.com/review/875-intel-core-i7-5960x-haswell-e/page9.html

https://www.bit-tech.net/hardware/2014/08/29/intel-core-i7-5960x-review/10

The thing is, the FX chips fall between the i3 and i5 price range. Comparing a $160 FX chip to a $360 i7 chip isn’t a price-based comparison at all. The i5 only beats the FX in single-threaded work, and the i3 barely beats it there. With DX12 cutting API overhead, you can forget about the i5 beating the FX in any game that uses DX12.

It will be 1-2 years before DX12 is mainstream anyway, and by then new CPUs will be out (hopefully something worth getting excited over from AMD). Hoping something will be better in the future is not a good way to measure performance now. Single-threaded, the i3 and i5 can beat the FX in many tasks. Unless you have something that can use 6+ cores properly, the Intel will almost always win in almost everything with multiple threads as well; just review most of the benchmarks and tests around. You can spend almost the same on an i5, produce less heat, use less power, use the stock cooler and beat out the AMD 8-core, because the simple fact is that very, very few games can efficiently use more than even 4 cores.

Games on the XBOne are already using DX12 patches. 99.9% of games on the PC are ported from consoles. The PS4 and XBOne both use AMD 8-core APUs. Previous consoles used 3-core CPUs, from which the titles were ported to PC. If you have Fire Strike on 3DMark, you will see how the API overhead test plays out between AMD and Intel. Intel has had a rough time paired with Nvidia chips in DX12, while AMD hasn’t (I figure it’s because DX12 basically copied Mantle). Go ahead and buy yourself a dual-core chip (Pentium or i3), or even a quad-core chip (4690K), for gaming, because within 2-3 years you’re going to be upgrading. It’s as simple as future-proofing, which AMD has been doing with the advent of HBM, HSA, and the new memory in their Zen chips.

“Unless you can get something that can use 6+ cores properly, the Intel will almost always win in almost everything”

In video rendering, there is practically no difference between 4c/8t chips (FX 8xxx and Intel i7 4xxx). Even though Cinebench shows an almost 200-point difference, real-world testing shows nothing more than a couple of seconds to a couple of minutes (never more than 5 minutes)!

BTW, you haven’t posted any facts; you’ve flamed at every chance you’ve gotten. Fact is, all FX 4000+ series chips are unlocked; you stated otherwise. Fact is, there is a difference between RAM speeds and latency; you stated there wasn’t. Fact is, the FX 8350 came out in 2012; you stated 2011. Fact is, there is no more than a 10 fps difference between Intel and AMD CPUs, even in Skyrim; you stated a minimum 20 fps difference. Fact is, you don’t seem to understand anything you’re going on about.

On the other hand, I’ve posted benchmarks, resources on RAM speeds and timings, and manufacturers’ websites. You’ve lipped off in every comment you’ve posted, yet you have still to post a single reference. Your opinion does not equal facts! Got that yet?

I knew the results as soon as I clicked this.

Also, isn’t this pretty useless? Graphics cards are going to do most of the work; these high-end CPUs are for actual processing work. They aren’t designed to display fancy graphics, that’s what other components are for :s A “gaming” CPU is something mid-range, just enough so it can handle everything decently. The GPUs handle the rest.

Too blanket a statement. In THESE particular games they tested, they are fairly GPU bound and CPU choice makes little difference. I used this justification for a long time while using an AMD CPU for gaming. The rest of the time, you’re wishing you were on Intel.

Actually GTA V is a fairly CPU bound game. https://www.techspot.com/review/991-gta-5-pc-benchmarks/page6.html

https://youtu.be/0638xSR4sMQ